My She/it Wolf Dog Girlfriend Ate Your Golden Retriever Bf… Sorry 😢


More Posts from Zephiris and Others

I wanna see how much of the Tumblr user base is queer so I made this 😋

Reblog for larger sample size! ☺️

All fancy schmancy generative AI models know how to do is parrot what they’ve been exposed to.

A parrot can shout words that kind of make sense given context but a parrot doesn’t really understand the gravity of what it’s saying. All the parrot knows is that when it says something in response to certain phrases it usually gets rewarded with attention/food.

What a parrot says is sometimes kinda sorta correct/sometimes fits the conversation of humans around it eerily well but the parrot doesn’t always perfectly read the room and might curse around a child for instance if it usually curses around its adult owners without facing any punishment. Since the parrot doesn’t understand the complexities of how we don’t curse around young people due to societal norms, the parrot might mess that up/handle the situation of being around a child incorrectly.

Similarly, AI lacks understanding of what it’s saying/creating. All it knows is that when it arranges pixels or words in a certain way after being given some input it usually gets rewarded/gets to survive, and so it continues to get the sequence of words/pixels following a prompt correct enough to imitate people convincingly (or that poorly performing version of itself gets replaced with another version of itself which is more convincing).

I argue that a key aspect of consciousness is understanding the gravity and context of what you are saying — having a reason that you’re saying or doing what you are doing more than “I get rewarded when I say/do this.” Yes AI can parrot an explanation of its thought process (eli5 prompting etc) but it’s just mimicking how people explain their thought process. It’s surface level remixing of human expression without understanding the deeper context of what it’s doing.

I do have some untested ideas as to why its understanding is only surface level but this is pure hypothesis on my part. In essence I believe humans are really good at extrapolating across scales of knowledge. We can understand some topics in great depth while understanding others only on a surface level, and go anywhere in between those extremes. I hypothesize we are good at that because our brains have a fractal structure to them that allows us to have different levels of understanding and look at some stuff at a very microscopic level while still considering the bigger picture and fitting that microscopic knowledge into our larger zoomed out understanding.

I know that neural networks aren’t fractal (self-similar across various scales) and can’t be by design of how they learn/how data is passed through them. I hypothesize that makes them only understand the scale at which they were trained. For LLMs/GANs of today that usually means a high level overview of a lot of various fields without really knowing the finer grained intricacies all that well (see how LLMs make up believable sounding but completely fabricated quotes for long writing or how GANs mess up hands and text once you zoom in a little bit).

There is definitely more research I want to do into understanding AI and more generally how networks which approximate fractals relate to intelligence/other stuff like quantum physics, sociology, astrophysics, psychology, neuroscience, how math breaks sometimes etc.

That fractal stuff aside, this mental model of generative AI being glorified parrots has helped me understand how AI can seem correct at first glance/zoomed out yet completely fumble on the details. My hope is that this can help others understand AI’s limits better and therefore avoid putting so much trust into it that AI starts to have the opportunity to mess up serious stuff.

Think of the parrot cursing around children without understanding what it’s doing or why it’s wrong to say those words around that particular audience.

In conclusion, I want us to awkwardly and endearingly laugh at the AIs which mimic the squawks of humans rather than take what they say as gospel or as truth.

why don't the mitochondria cause cancer. if i was a mitochondria i would be suddenly struck by tremendous joie de vivre and remember my youth as a bacteria and be like holy shit why am i listening to this fucker. im gonna eat all my surroundings im freeeeee. ig probably its bc they depend a bunch on proteins encoded in the nucleus but like. cmon dude

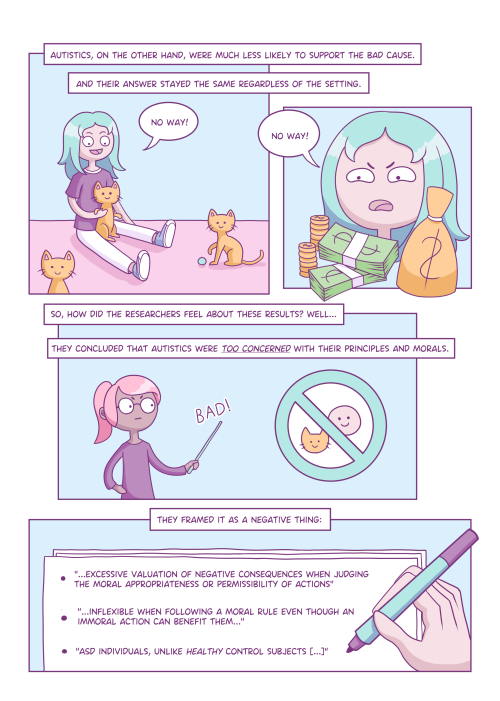

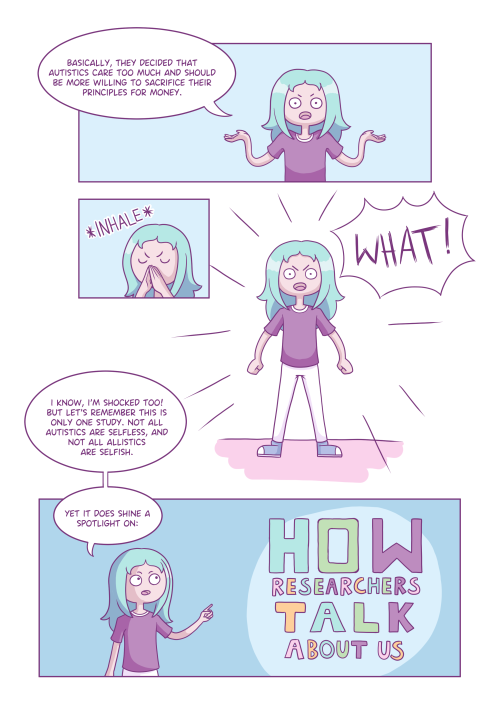

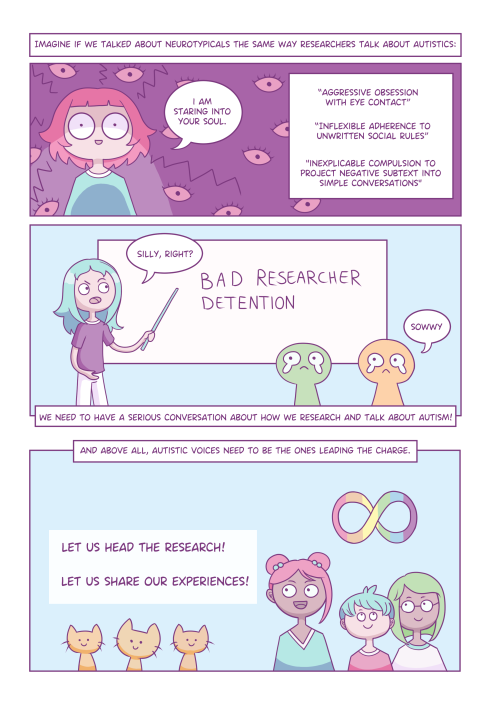

There has been a lot of research about autistics over the years, but this one really took the cake!

This is what happened when researchers attempted to compare the moral compass of autistic and non-autistic people…

made this tiny edit to this work by @mxsparks@oulipo.social, so now it's a very convoluted dual language pun

guess this is an art blog now /j

unfinished song synth rock thing. idk if i'm gonna finish it so i'm posting it. I want to post more on this site but i don't really have anything to show because i'm not actively working on any personal projects besides music

- thiuesque reblogged this · 3 months ago
- silvercrane14 liked this · 3 months ago
- erosionn liked this · 3 months ago
- mangoes-machinery reblogged this · 3 months ago
- goblin-king-reign liked this · 3 months ago
- crushcrushcrush-mp3 reblogged this · 3 months ago
- dipthestick reblogged this · 3 months ago
- jaymilepland liked this · 4 months ago
- woofularunit liked this · 6 months ago
- labradoriteheadache liked this · 6 months ago
- misokisses reblogged this · 7 months ago
- chaoticmagpies liked this · 7 months ago
- dykinguptheaether liked this · 7 months ago
- stillnotalfonso liked this · 7 months ago
- quanticide liked this · 7 months ago
- breadedcallie reblogged this · 7 months ago
- miiiwu liked this · 7 months ago
- midnightvibrant reblogged this · 7 months ago
- friendpillow liked this · 7 months ago
- x-creature-feature-x liked this · 7 months ago
- bluesableeye reblogged this · 7 months ago
- thatdykepunkslut reblogged this · 7 months ago
- supersleepyslowpoke reblogged this · 7 months ago
- wolfsskull liked this · 7 months ago
- saniello liked this · 7 months ago
- gaytvs liked this · 7 months ago
- lotus45 liked this · 7 months ago
- administer-distractions reblogged this · 7 months ago
- thatdykepunkslut liked this · 7 months ago
- mccha reblogged this · 7 months ago
- mccha liked this · 7 months ago
- gayboysteve liked this · 7 months ago
- thefancifulsmaug liked this · 7 months ago
- k1llermustang liked this · 7 months ago
- astheniadawn reblogged this · 7 months ago
- astheniadawn liked this · 7 months ago
- daturainnoxia liked this · 7 months ago
- tayne-dot-exe reblogged this · 7 months ago
- prettycryptid reblogged this · 7 months ago
- prettycryptid liked this · 7 months ago
- dniceone13 liked this · 7 months ago
- albi-mander reblogged this · 7 months ago
- albi-mander liked this · 7 months ago
- mantis-lizbian reblogged this · 7 months ago
- mantis-lizbian liked this · 7 months ago
- ceaseless-birder liked this · 7 months ago
- ask-the-vargonians liked this · 7 months ago
- moderatetoaboveaverage reblogged this · 7 months ago
- alex-the-mediocre liked this · 7 months ago
- lbctal reblogged this · 7 months ago

20, They/Them

Yes I have the socks and yes I often program in rust while wearing them. My main website: https://zephiris.me

132 posts